Main branches

We have two main branches: develop and master. Current development goes into develop, and when we do a release, develop is merged into master. On our TeamCity continuous integration server, a Continuous Integration build feeds from the develop branch and builds immediately whenever something is pushed to that branch in the central repository (GitHub). Similarly, a build feeds from the master branch and uses our automated deployment system to deploy to production.

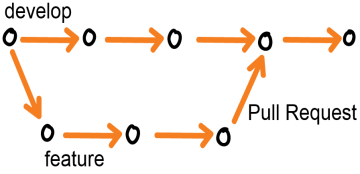

We do feature branching. Each feature under development gets its own branch; work is done there, and finally the feature branch is merged back into the develop branch using GitHub pull requests.
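As a rough illustration, the life of a feature branch up to the pull request might look like the sketch below. It runs in a throwaway sandbox where a local bare repository stands in for GitHub; the branch name `feature/login-form`, the file name, and the identity settings are hypothetical and not part of our actual setup.

```shell
#!/bin/sh
set -eu

# Throwaway sandbox: a bare repository stands in for the central GitHub repository.
sandbox=$(mktemp -d)
git init -q --bare "$sandbox/origin.git"
git init -q "$sandbox/work"
cd "$sandbox/work"
git symbolic-ref HEAD refs/heads/master
git config user.name "Example Dev"
git config user.email "dev@example.com"
git remote add origin "$sandbox/origin.git"

# Seed master and develop so there is something to branch from.
git commit -q --allow-empty -m "Initial commit"
git branch develop
git push -q origin master develop

# Start the feature from the current state of develop.
git checkout -q -b feature/login-form develop

# Work happens on the feature branch.
echo "login form" > login.txt
git add login.txt
git commit -q -m "Add login form"

# Publish the branch so colleagues can collaborate; -u records the upstream branch.
git push -q -u origin feature/login-form

# The pull request into develop is then opened on GitHub.
```

Opening the pull request itself happens in the GitHub web interface, so it appears here only as a comment.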

A feature branch is usually pushed to the central repository (GitHub), which enables more than one developer to work together on a feature, and also enables ad hoc peer-to-peer code sharing. Because switching branches is easy in Git, a developer can easily stash her current work, switch to the feature branch of her colleague, assist on any issue, and later switch back.
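The stash-and-switch routine described above could be sketched as follows. This is a local-only sandbox, so the colleague's branch (`feature/search`, a made-up name) already exists locally; in practice it would typically be fetched from the central repository first. All file names and identities are placeholders.

```shell
#!/bin/sh
set -eu

# Throwaway sandbox repository with two feature branches.
sandbox=$(mktemp -d)
git init -q "$sandbox/work"
cd "$sandbox/work"
git symbolic-ref HEAD refs/heads/develop
git config user.name "Example Dev"
git config user.email "dev@example.com"
echo "v1" > notes.txt
git add notes.txt
git commit -q -m "Initial commit"
git branch feature/search                 # the colleague's branch (hypothetical)
git checkout -q -b feature/login-form     # my own branch (hypothetical)

# Uncommitted work in progress on my own feature branch.
echo "half-finished change" >> notes.txt

# Park the uncommitted change so the working tree is clean.
git stash push -q -m "WIP: login form"

# Switch to the colleague's branch and help out.
git checkout -q feature/search
echo "search fix" > search.txt
git add search.txt
git commit -q -m "Fix search issue"

# Switch back and restore the parked work.
git checkout -q feature/login-form
git stash pop -q
```

After `git stash pop`, the working tree is back to where it was before the interruption, and the stash list is empty again.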

One of the challenges of feature branching is keeping in sync with the develop branch, because it will typically move forward in time, while any feature branch is based on the state of the develop branch at the time of the feature branch's birth. We are using our pull request-based review workflow to ensure that the developer in charge of a feature is also responsible for resolving any merge issues:

- Developer finishes the feature and makes a pull request.

- Reviewer uses GitHub to do the review.

- GitHub will tell if the pull request cannot automatically be merged (i.e. cannot be merged back into develop branch without conflicts). In that case the reviewer will ask the developer to do a “reverse merge”.

- A reverse merge means: on the feature branch, merge the latest develop into it, resolve any merge conflicts, and finally push the reverse-merged feature branch.

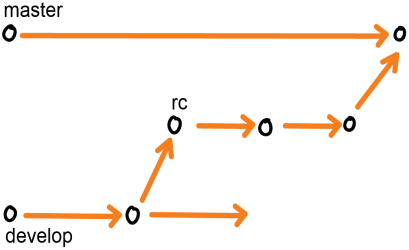
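A reverse merge with a conflict might play out roughly as in this sketch. It uses a local sandbox with a deliberately conflicting change to a made-up file, `app.conf`; the branch name and the chosen resolution are illustrative assumptions. Against the real central repository, one would typically run `git fetch origin` first and merge `origin/develop`.

```shell
#!/bin/sh
set -eu

# Throwaway sandbox: develop and a feature branch edit the same line.
sandbox=$(mktemp -d)
git init -q "$sandbox/work"
cd "$sandbox/work"
git symbolic-ref HEAD refs/heads/develop
git config user.name "Example Dev"
git config user.email "dev@example.com"
echo "timeout=30" > app.conf
git add app.conf
git commit -q -m "Initial commit"

# The feature branch changes the setting...
git checkout -q -b feature/login-form
echo "timeout=60" > app.conf
git commit -q -am "Raise timeout for login form"

# ...while develop moves forward with a different value.
git checkout -q develop
echo "timeout=45" > app.conf
git commit -q -am "Tune timeout"

# Reverse merge: from the feature branch, merge the latest develop into it.
git checkout -q feature/login-form
if ! git merge --no-edit develop; then
    # Resolve the conflict by hand (here: keep the feature's value),
    # then stage the file and conclude the merge.
    echo "timeout=60" > app.conf
    git add app.conf
    git commit -q --no-edit
fi

# Finally the feature branch would be pushed, e.g.:
#   git push origin feature/login-form
```

Once the reverse-merged branch is pushed, develop is an ancestor of the feature branch, so the pull request can be merged without conflicts.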

Release Management

Our release management process includes a release candidate branch (rc). When we are preparing the next release, we merge the develop branch into rc.

From then on, any stabilizing of the upcoming release is done on rc. We typically have a build on our build server that feeds from the rc branch and deploys automatically to an rc area, where the project stakeholders can preview, test and verify the upcoming release.

We branch to rc to free up the develop branch, so that work on the next version can start immediately, without waiting for the release to be finished.

Once rc is considered stable, we merge to master, which by definition is a release (again, our build system has a build that feeds from master and deploys to production). We also merge back any changes done on rc to the develop branch.

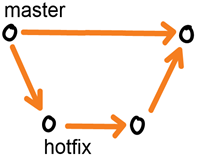
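The release cycle above can be walked through in a local sandbox, as in the following sketch. File names, commit messages, and identities are invented for the example, and plain local merges stand in for whatever review steps apply in practice.

```shell
#!/bin/sh
set -eu

# Throwaway sandbox with master, rc and develop.
sandbox=$(mktemp -d)
git init -q "$sandbox/work"
cd "$sandbox/work"
git symbolic-ref HEAD refs/heads/master
git config user.name "Example Dev"
git config user.email "dev@example.com"
git commit -q --allow-empty -m "Initial commit"
git branch rc
git checkout -q -b develop
echo "feature A" > feature-a.txt
git add feature-a.txt
git commit -q -m "Add feature A"

# 1. Prepare the release: merge develop into rc.
git checkout -q rc
git merge -q --no-edit develop

# 2. develop is free again: work on the next version continues.
git checkout -q develop
echo "feature B" > feature-b.txt
git add feature-b.txt
git commit -q -m "Add feature B (next version)"

# 3. Stabilize the upcoming release on rc.
git checkout -q rc
echo "release fix" > fix.txt
git add fix.txt
git commit -q -m "Stabilize release"

# 4. rc is stable: merging into master is the release.
git checkout -q master
git merge -q --no-edit rc

# 5. Merge the changes made on rc back into develop.
git checkout -q develop
git merge -q --no-edit rc
```

At the end, master and rc point at the same commit, develop contains the stabilization fix, and the next-version work on develop has not leaked into master.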

Hotfixing

Whenever a bug is discovered in production (yes it happens even to us), we do hotfixing directly on the master branch.

The hotfix is done just as we do feature branches, except that it branches directly off the master branch. Once done and merged back into master, we also merge it into develop to keep things in sync.
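A hotfix round trip could look roughly like this in a local sandbox. The branch name `hotfix/price-rounding` and the file contents are hypothetical, and the local merges stand in for the pull request that would normally carry the hotfix back into master.

```shell
#!/bin/sh
set -eu

# Throwaway sandbox: master is in production, develop is already ahead.
sandbox=$(mktemp -d)
git init -q "$sandbox/work"
cd "$sandbox/work"
git symbolic-ref HEAD refs/heads/master
git config user.name "Example Dev"
git config user.email "dev@example.com"
echo "price=10" > app.conf
git add app.conf
git commit -q -m "Initial release"
git checkout -q -b develop
echo "next version work" > next.txt
git add next.txt
git commit -q -m "Start next version"

# Branch the hotfix directly off master, not off develop.
git checkout -q -b hotfix/price-rounding master
echo "price=10.00" > app.conf
git commit -q -am "Fix price rounding"

# Merge the hotfix back into master (this is what gets deployed).
git checkout -q master
git merge -q --no-edit hotfix/price-rounding

# Also merge it into develop to keep things in sync.
git checkout -q develop
git merge -q --no-edit hotfix/price-rounding
```

Because the hotfix starts from master, none of the unreleased work on develop reaches production along with the fix.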